The landscape of AI video generation has undergone a radical transformation, moving far beyond the era of experimental demos and flickering, short-form clips. Today, the creative industry, spanning professional filmmakers, digital marketers, and independent content studios, is looking for tools that don’t just generate visuals but actually fit into high-stakes, real-world workflows. In this environment, the Seedance 2.0 AI Video Generator, developed within the Dreamina ecosystem, has emerged as a new benchmark, positioning itself as the best Sora 2 alternative for those who prioritize reliability over randomness.

As a core component of Dreamina, ByteDance’s all-in-one AI creative platform, Seedance 2.0 represents a fundamental shift in the industry’s trajectory. It marks the transition from “fun AI experiments” that produce unpredictable art to controllable, production-ready video creation that meets the rigorous standards of modern media.

The Problem with Traditional AI Video Tools

Despite the hype surrounding early generative models, professional creators still struggle with three core limitations that prevent AI from being a primary tool in production:

- Lack of Granular Control: Most models act as a “black box,” where the user provides a prompt and hopes for the best, with no way to tweak specific elements of the output.

- Inconsistent Assets: Maintaining the same character, object, or artistic style across multiple shots is notoriously difficult, leading to “visual drift” that breaks immersion.

- Fragmented Workflows: Creators often have to jump between several different tools to generate an image, animate it, sync audio, and perform final edits.

When a small detail is off—such as a character’s hair color changing or a background flickering—creators are often forced to regenerate the entire video. This “start-over” cycle not only wastes compute time but also shatters creative momentum. Seedance 2.0 approaches AI video from a fundamentally different angle, treating video editing more like image editing: a process that is precise, iterative, and entirely controllable.

Multimodal Reference: The Real Game Changer

What truly sets Seedance 2.0 apart from its competitors is its top-tier multimodal reference capability. While many models rely solely on text prompts, Seedance 2.0 allows the AI to “see” and “hear” the user’s intent through real assets. The model can simultaneously reference text, images, videos, and audio, with support for up to 12 reference files at once.

This multimodal logic allows creators to guide the AI with far more clarity than a text prompt alone. Instead of writing a 200-word prompt to describe a specific lens movement, a user can simply upload a reference clip of a “dolly zoom.” Seedance 2.0 doesn’t just “generate” content; it learns from the specific references you provide. It can extract:

- Motion Dynamics: Learning how a character should move or dance from a reference video.

- Cinematography: Replicating complex camera paths and lens effects.

- Editing Rhythm: Borrowing the pacing and “cut” style from existing successful content.

This makes it possible to recreate viral-style formats, adapt brand-specific visual languages, or modify existing footage with surgical precision—all while ensuring the output remains grounded in the user’s original vision.

Editing Video as Easily as an Image

One of Seedance 2.0’s most practical strengths is its ability to perform “direct edits” on existing video assets. This feature realizes the dream of “One-Sentence Video Editing,” where changing a video is as intuitive as using a brush in Photoshop.

Through natural language and image references, users can replace elements, remove unwanted objects, add new components, or perform complex style transfers within a video. Crucially, the AI preserves the multi-angle consistency of the main subject. If you change a character’s clothing in a 360-degree pan, the new outfit stays perfectly mapped to the body from every angle. This “edit instead of recreate” approach dramatically reduces production costs and iteration cycles, allowing teams to meet tight deadlines without sacrificing quality.

Control Is the New Quality

In the professional world, visual beauty is secondary to consistency. Seedance 2.0 excels in controllability, delivering industry-leading stability across characters, objects, and compositions.

A major upgrade in this version is the Font Consistency feature. Branded content requires stable, accurate typography, yet AI has historically struggled to render text without jittering. Seedance 2.0 ensures that font styles and stylized overlays remain faithful across scenes. This precision extends to “Human and Object Consistency,” accurately restoring facial features, vocal timbre (for lip-sync), and intricate framing details from reference materials. Whether it’s a fast-paced edit or a slow cinematic shot, the transitions feel intentional and the visuals remain coherent, making it the ideal choice for brands where visual integrity is non-negotiable.

Cinematic Output with Physical Realism

Seedance 2.0 also raises the bar for output quality by improving the AI’s understanding of physical laws. Motion no longer feels artificial; shadows, light diffusion, and gravity-based interactions now feel natural and grounded.

The model supports Intelligent Continuation, a feature that allows creators to “keep filming” a scene rather than starting a new one. This “Smart Storytelling” capability ensures that narrative flow and visual logic remain unbroken across multiple shots. Combined with film-level high-definition rendering and advanced multi-shot narrative generation, the output is ready for professional screens.

Furthermore, audio-visual synchronization has been significantly optimized. Seedance 2.0 supports both single- and multi-person lip-sync, generating character speech and ambient environmental sound in step with the visual action. This creates an immersive experience in which sound and image are synthesized together rather than patched together in post-production.

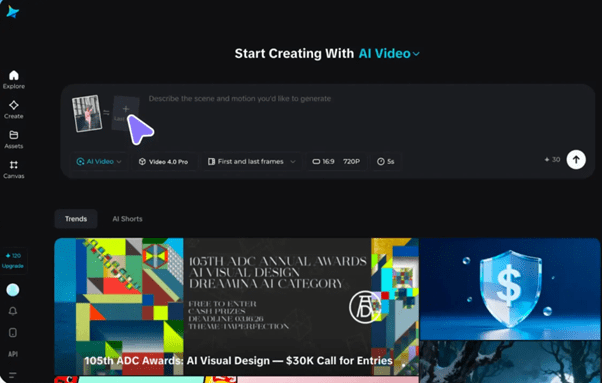

A Complete Creative Workflow: The Power of One

What keeps Seedance 2.0 from being “just another tool” is its home within the broader Dreamina ecosystem. It is designed as part of a unified creative workflow. By integrating the latest Seedream 5.0 image generation model and specialized AI Agents, Dreamina covers the full path from image creation to finished video.

A creator can move from a conceptual sketch to a high-fidelity image, and then use that image as a reference for a cinematic video—all within a single browser-based platform. There is no need to jump between different tools or manage complex file exports. This end-to-end approach addresses the “Workflow Gap” in AI creation, allowing creators to focus on their ideas rather than technical workarounds.

Conclusion: Precision Meets Imagination

As AI technology continues to mature, the focus is shifting away from “what the AI wants to show us” toward “what we want the AI to build for us.” Seedance 2.0 stands at the forefront of this shift. By offering top-tier multimodal reference, surgical editing control, and deep narrative consistency, it provides one of the most practical and powerful production environments available today.

For creators, agencies, and studios seeking a production-ready solution that balances creative freedom with professional-grade precision, Seedance 2.0 sets a new standard. It is the bridge between inspiration and final cut, helping ensure that every frame matches the creator’s intent.